Guinea Fowl, Conditioner, and Google Lens

With AI photo recognition, you never have to "use your words" again.

👋 Hi, I’m Till, and welcome to AI Anxiety, a weekly newsletter all about AI for the AI-avoidant. Let’s learn together.

Two Thursdays ago, in the first-ever edition of AI Anxiety, we talked about using AI as a collaborator. As ChatGPT so artfully summarized it for me ➡️

“In the first issue of "AI Anxiety," titled "AI as a Collaborator, Not a Writer," Till Kaeslin discusses how AI, particularly Chat GPT, can be used as a collaborative tool in writing, rather than replacing the writer entirely. The newsletter emphasizes the importance of using AI as a partner in the creative process, especially for tasks where finding the right wording can be challenging … The newsletter aims to present AI as a helpful collaborator, easing fears of it replacing human roles.”

Another perspective that’s been gaining lots of steam recently is using AI as an assistant.

In OpenAI’s opening keynote for their Developer Day conference, held last Monday, AI assistance was one of the main focuses.

I’d definitely recommend checking out the opening keynote. It can get a little technical, since the conference is specifically for developers, but it’s got some really interesting announcements (mostly about cool new things GPT can now do).

One of the new things GPT can do is be personalized.

With ChatGPT Plus (which costs $20 a month, for transparency’s sake), you can now create your own GPT.

You personalize it in natural, plain English, which means you don’t need to know how to code to get your customized GPT assistant off the ground; you just have to tell it what you want it to do. Here’s an example from OpenAI:

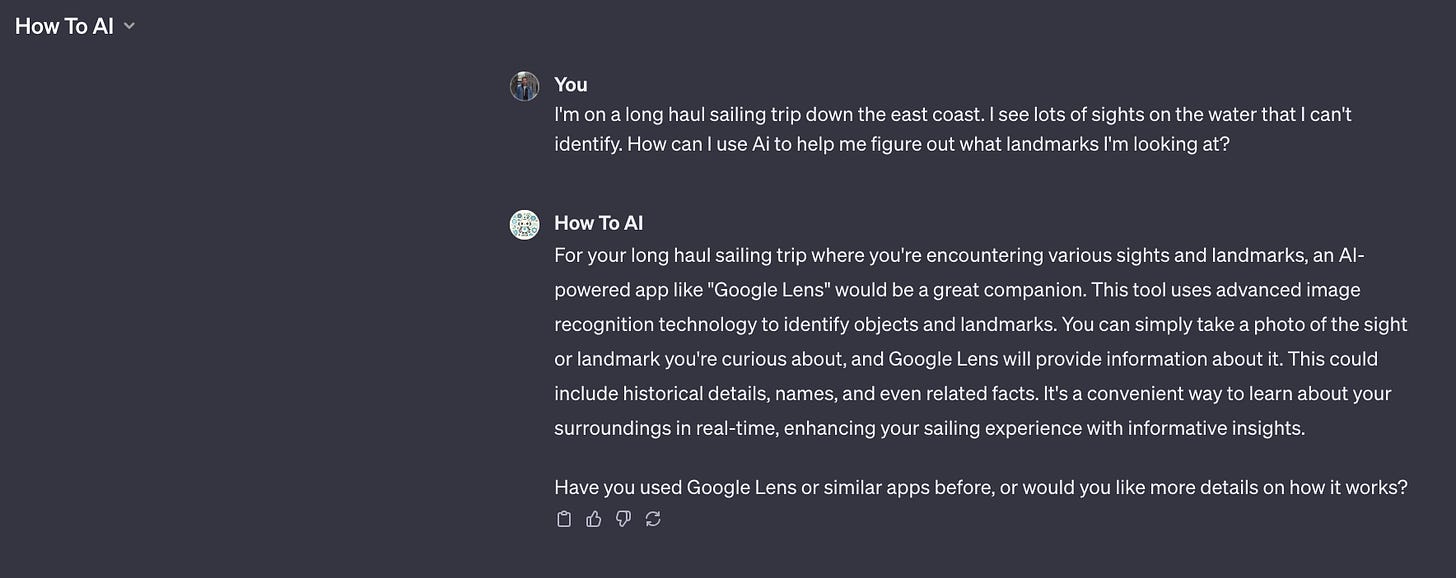

So I messed around and created How to AI, a GPT that suggests ways to integrate AI into your everyday life. It’s tailored specifically to people like you and me, who aren’t already highly technical AI enthusiasts.

When I was configuring it, it took some trial and error to learn what worked and what didn’t. After tweaking it a bit, I came up with the following parameters for my GPT:

- Provide concrete examples of AI services and apps the user can try based on their question and needs
- Follow up with users based to dig into what they need
- Follow up with users to ask if the response was helpful, or if they're looking for something else
- Only suggest AI powered services
- Keep the answers concise
- Only provide one option at a time, don't overwhelm the user with too many bullet points

*This is the exact text I fed it to get it to where it is now.

So how are we going to use this?

While thinking about how this could actually be helpful, I thought of my parents. They’re on a Substack-documented sailing trip right now, heading south down the East Coast, with my pup Umi to keep ’em company.

You see lots of things on a boat trip that have a story worth digging into. Pelicans diving into the water for fish, old lighthouses in the distance, massive cargo ships floating by going … who knows where. For all the unanswered questions you might have on a trip like this, it used to be that you’d just have to say, “who knows?” or, at best, try and put what you’re seeing into words so that Google could find what you’re looking for.

My How to AI GPT gave me another option: Google Lens.

I gave the GPT my scenario for context and then asked it what AI tool it could suggest to help me.

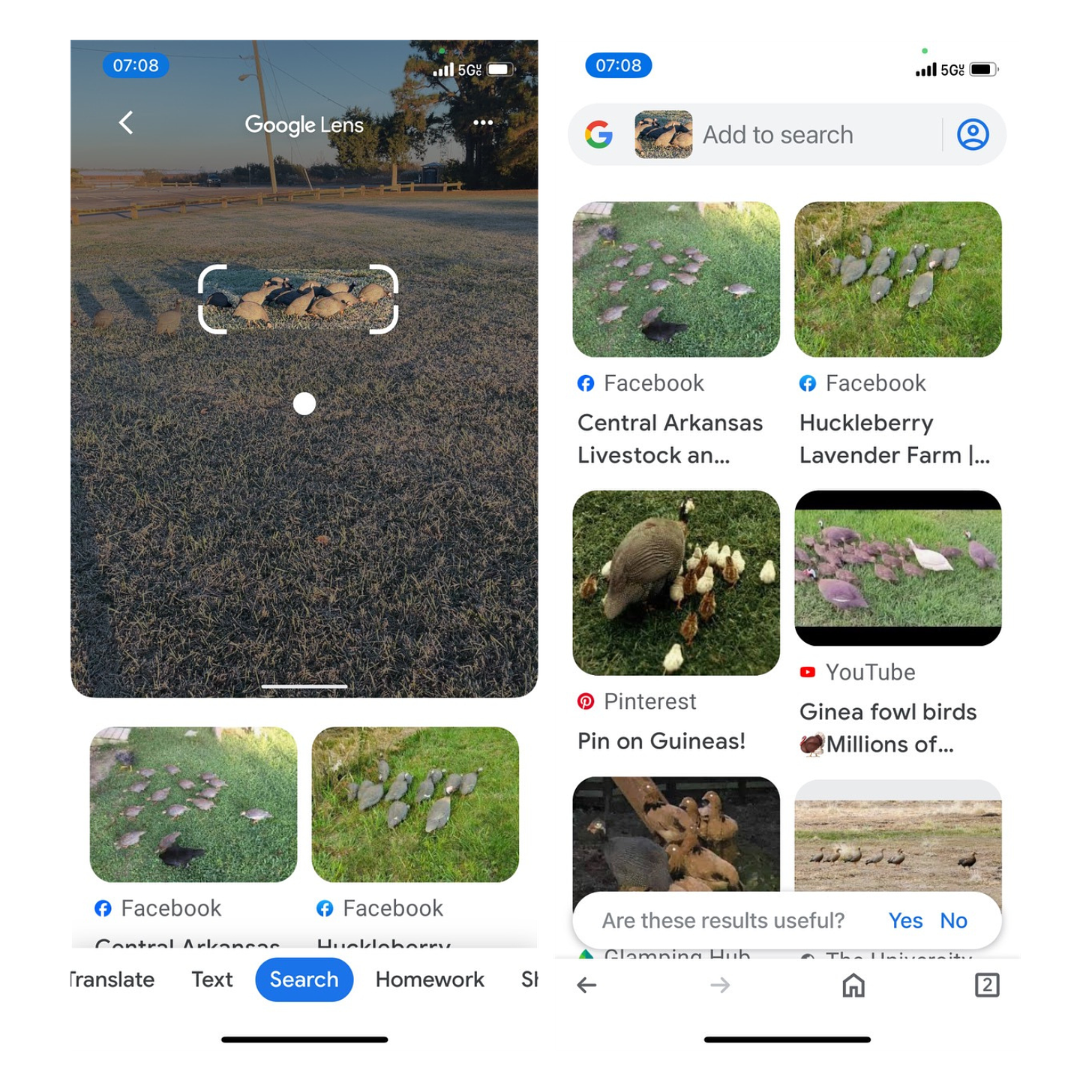

So I gave my parents some homework:

Use Google Lens to take photos of the things they see on their next day’s journey and report back what they learn.

Thankfully my parents are both A+ students and got back to me pretty quickly. Guinea Fowl on their … morning walk? I’m not actually sure where they saw these birds, but nonetheless, they used Google Lens to figure out what they were.

In real time, they were able to hold their phone up, snap a picture of the group of strange birds, and figure out what they were looking at.

Now, I realize that identifying a (flightless?) bird on your morning walk doesn’t sound like a world-changing revelation in and of itself, but the technology and promise behind it could be.

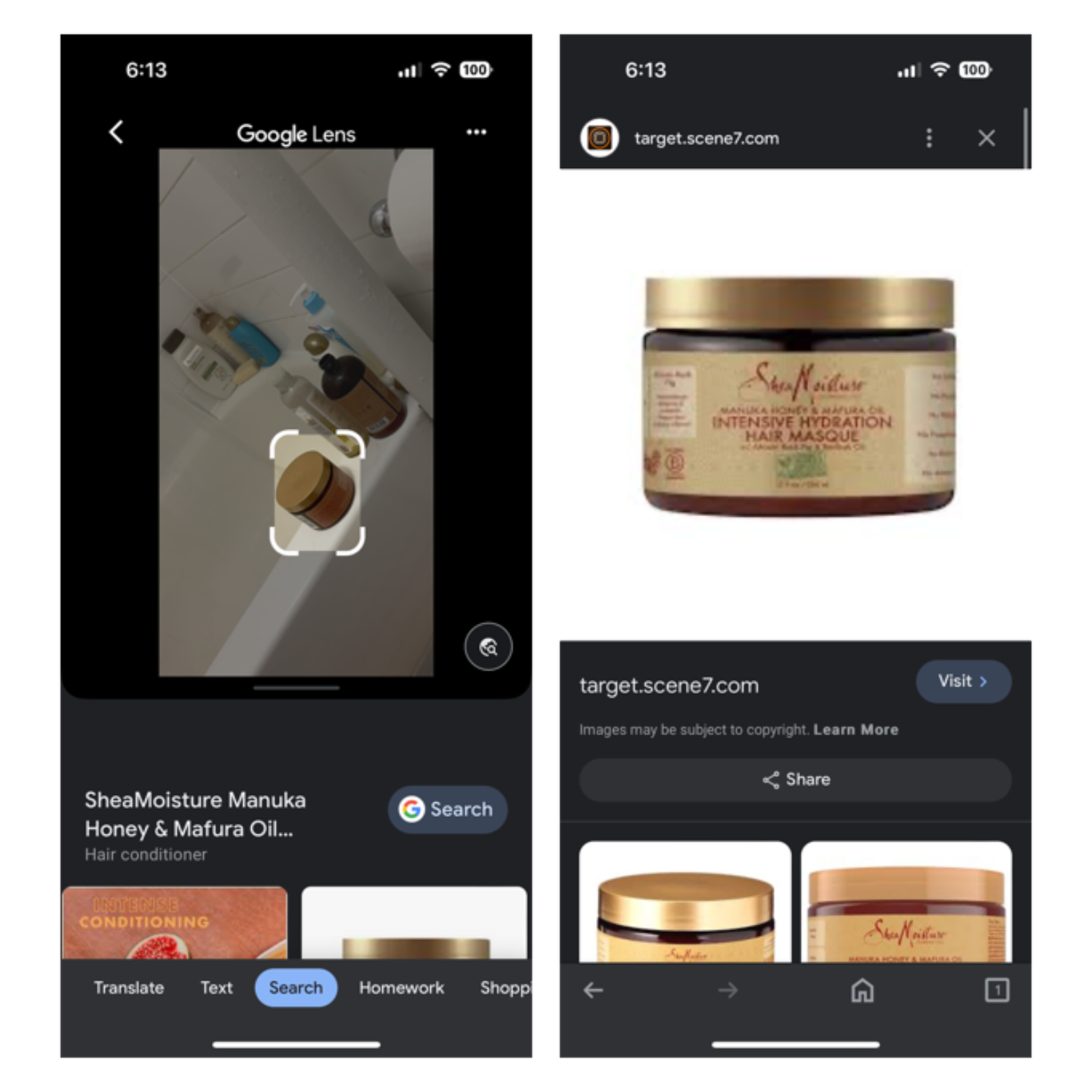

AI photo recognition means we’ll never again have to translate what we see into words.

Think about it: as AI photo recognition gets better and better (which it will) and our phones cling closer and closer to our sides (which they already do), you’ll never have to “use your words” again.

“The café with the red awning on 3rd Street”

“That purple cake that tastes like vanilla”

“That tan collared shirt with the logo of a lamb on it”

We won’t need to describe locations we want our friends to meet us at, or desserts we’re hungry for, or clothes we want to buy. Just take a picture of it, and AI will give you the words; none of your own required.

Always in the know.

We’re kind of already there (or at least, not very far off). Both Google and ChatGPT have AI photo recognition, and I’m already impressed Google Lens didn’t think those Guinea Fowl were just a bunch of rocks.

Hell, I just used it to identify my conditioner, and I didn’t even have to turn the bottle over or get a nice picture.

This weekend, try taking some photos with Google Lens.

As you go about your daily life, get a feel for relying on AI as an assistant.

If you get back anything worthwhile (something impressive, or something completely off), let me know. I want to hear from you!
